Anonymisation Part 1: challenges & considerations for life sciences

July 24, 2023

Introduction & anonymisation techniques

Effective anonymisation is a challenge for many organisations; however, the process remains a crucial tool in safeguarding privacy rights and ensuring UK and EU GDPR compliance. Here, we examine the challenges of using anonymisation techniques and the considerations needed to assess any limitations.

Anonymisation is the process of removing personal identifiers from data in order to protect the privacy of individuals. For life sciences, this is an important technique and helps organisations make full use of data for research and innovation, while reducing obligations under data protection regulations.

It could be argued that anonymisation is the cornerstone of ethical research, although this is a rather crude overview. Research relies upon identifiable data*, as there is often a need to link back to a particular individual. However, anonymisation is crucial to enabling further or secondary research to be undertaken using an existing dataset. An organisation should therefore consider the most appropriate anonymisation technique in terms of compliance, taking into account any ongoing and future requirements. There are two principal ways in which anonymisation can be achieved:

- Generalisation: Personal data is aggregated to a level that groups individuals within a demographic to reduce the risk of re-identification of an individual data subject.

- Data Masking: Personal data is replaced with random strings of numbers and letters to mask the existing data.
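As a rough illustration of these two techniques, the minimal Python sketch below generalises an exact age into a ten-year band and replaces a direct identifier with a random string. The field names (`patient_id`, `age`, `condition`), the band width and the helper functions are assumptions made for this example only, not a prescribed method. Note that no mapping between the original and masked values is kept here; if such a mapping were retained, the data would be pseudonymised rather than anonymised.

```python
import secrets


def generalise_age(age: int, band_width: int = 10) -> str:
    """Replace an exact age with a band such as '30-39'."""
    lower = (age // band_width) * band_width
    return f"{lower}-{lower + band_width - 1}"


def mask_value(length: int = 12) -> str:
    """Return a random hexadecimal string unrelated to the original value."""
    # token_hex(n) returns 2 * n hexadecimal characters
    return secrets.token_hex(length // 2)


def anonymise_record(record: dict) -> dict:
    """Mask the direct identifier and generalise the age (hypothetical fields)."""
    return {
        "patient_id": mask_value(),
        "age_band": generalise_age(record["age"]),
        "condition": record["condition"],
    }


record = {"patient_id": "ABC-1234567", "age": 34, "condition": "dementia"}
print(anonymise_record(record))
```

Whether the output is truly anonymous still depends on the remaining attributes and on what other data could be combined with it, which is the subject of the assessment methods discussed later.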

Using these and other anonymising techniques fortifies regulatory compliance, as well as helping to safeguard against data misuse and assisting with the consistency of results. If a robust process is implemented, this will not only avoid some of the more highly regulated activities that arise from using personal data, but also help to build trust and commercial opportunities.

The limitations of anonymisation

Life sciences organisations often deal with highly specific and detailed personal data, which can make effective anonymisation particularly challenging. In some projects, it may be essential to hold certain identifiers, making true anonymisation impossible.

Outliers & anomalies

Where data is linked to outliers or anomalies, knowing certain identifying aspects about a patient could be crucial, but also problematic. For example, knowing about a patient in an age demographic of 30-40 with dementia might be essential for research advances, but would create an issue for the anonymisation process.

These types of cases often involve rare conditions or small demographic groups, and the data is much needed for progress within the scope of the research study. If these patients are excluded from the dataset, it may well impact the effectiveness of the trial, and affect the utility of that data for ongoing research.

Consent & onwards use

Most studies obtain an individual’s consent for their data to be used in a specific way, for a specific purpose and for a specified period. However, it must be noted that consent is often not the lawful basis for processing relied upon under data protection legislation. This is a complicated area, and lawful bases will be discussed in additional blogs. Practically, in terms of individual consent for a clinical trial, this means the data gathered may be limited to a particular scoped study and not necessarily available for any further usage.

Issues arise when ongoing or future studies need to use or share a dataset. Aside from any legal concerns, this raises ethical questions around the extent to which anonymised data can be used outside the agreed scope of study. This is a crucial factor that can affect the availability and utility of data even after anonymisation and should be considered within the parameters of a risk & compatibility assessment, as well as the need for further approval of relevant ethics committees or similar.

Differences for various jurisdictions

Organisations need to also be aware of the broad exemptions available in some territories but not others. Different jurisdictions have varying data protection regulations, which can complicate the process of anonymisation for studies operating across borders.

Some jurisdictions allow exemptions for research purposes and allow the processing of personal data (data that has not been anonymised) without consent. Other jurisdictions have much stricter requirements that do not allow for such processing.

It is essential to check the legal requirements in all jurisdictions connected with the study and assess each territory’s requirements for compliance.

Assessing the extent of anonymisation

Anonymisation could be reframed as an exercise in risk management, much like the general aspects of data protection. But how can the risks posed by a dataset be assessed, and how can we accurately determine whether it has been successfully anonymised?

Reasonable likelihood test

For organisations just beginning their privacy journey, it may be beneficial to begin with this test.

To outline a little history: The 1995 EU Data Protection Directive first introduced the concept of “reasonably likely” when considering the risks of re-identification. Now, it is more commonly known as the reasonable likelihood test.

Unfortunately, it is not possible to obtain an absolute guarantee that an individual will never be identified from a particular dataset, particularly in the life sciences, where highly specific data is often collected. However, it is a legal responsibility of an organisation to ensure all reasonable attempts have been made to limit the risks.

The considerations for this test concern the methods that are “reasonably likely” to be used by an “intruder” or an “insider”. Sounds simple, but it can be difficult to pin down the methods that are reasonably likely to be used, especially with questions of time and costs, and the technology available. In short, this test has limited value and can end up being a circular argument.

Motivated intruder test

More developed organisations would do well to consider using the concept of a “motivated intruder”. This test was introduced in the 2012 UK ICO Anonymisation guidance and refers to an external individual who does not possess a specialist skillset (i.e. not a hacker, but a reasonably switched-on person).

A motivated intruder would have access to the internet and other publicly available datasets and, for whatever reason, is sufficiently determined to re-identify a particular anonymised dataset.

The risk assessment of this test involves reviewing each “data release” (e.g. public release, third party sharing, etc.), and evaluating whether an imagined motivated intruder could re-identify a given individual.

This exercise highlights individual risks and allows foresight; however, the factors are inevitably subjective. As with all tests, there are limitations.

K-anonymity

For a more objective approach, organisations often use the k-anonymity method for quantifying risk. This can be likened to a “needle in a haystack” strategy and is a popular technique for addressing data privacy concerns.

K-anonymity is a technique whereby individuals’ data is pooled into a much larger group to suppress identifiers. Also called data generalisation, this method is a useful tool which can also be used for sharing de-sensitised data.

The k-value defines the effectiveness of anonymity. To assess this value, the number of records with identical attributes in an anonymised dataset needs to be counted; the k-value of the dataset is the size of the smallest such group. A k-value of 1 means at least one record is unique, and the data is likely not anonymous. If every record is identical to at least one other record, the dataset has a k-value of 2, and so on. Where k is high, it can be assumed the risk of individual re-identification is low.
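The counting exercise can be sketched in a few lines of Python. This is a minimal illustration rather than a complete risk assessment: the `k_value` function, the sample records and the choice of `age_band` and `region` as the identifying attributes are all assumptions made for the example. The k-value is taken as the size of the smallest group of records sharing the same values for those attributes.

```python
from collections import Counter


def k_value(records, quasi_identifiers):
    """Return the size of the smallest group of records that share
    identical values for the chosen attributes (0 for an empty dataset)."""
    groups = Counter(
        tuple(record[q] for q in quasi_identifiers) for record in records
    )
    return min(groups.values()) if groups else 0


# Hypothetical, already generalised records
records = [
    {"age_band": "30-39", "region": "North", "condition": "asthma"},
    {"age_band": "30-39", "region": "North", "condition": "diabetes"},
    {"age_band": "40-49", "region": "South", "condition": "asthma"},
    {"age_band": "40-49", "region": "South", "condition": "dementia"},
    {"age_band": "40-49", "region": "South", "condition": "asthma"},
]

print(k_value(records, ["age_band", "region"]))  # prints 2

# Adding one record with a unique combination drops the k-value to 1
records.append({"age_band": "80-89", "region": "North", "condition": "asthma"})
print(k_value(records, ["age_band", "region"]))  # prints 1
```

Which attributes count as identifying is itself a judgement call; choosing too few of them will overstate the k-value.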

Sounds straightforward; however, there can be anomalies or outliers, so-called fringe cases that might need diverging k-values. By definition, these cases are uncommon or unique, but they demonstrate the difficulties that can arise. This is also highlighted by the Singaporean Supervisory Authority, the Personal Data Protection Commission (PDPC), which suggests a k-value of 3 is appropriate for internal data sharing, while 5 is recommended for external cases.
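To show how fringe cases surface in practice, the short Python sketch below lists every group of records that falls below a chosen k threshold. The thresholds of 3 and 5 are the PDPC figures quoted above; the function name, constants and sample data are hypothetical and chosen purely for illustration.

```python
from collections import Counter

K_INTERNAL = 3  # PDPC suggestion for internal data sharing
K_EXTERNAL = 5  # PDPC recommendation for external sharing


def groups_below_k(records, quasi_identifiers, k):
    """Return each attribute combination shared by fewer than k records,
    together with the number of records in that group."""
    counts = Counter(
        tuple(record[q] for q in quasi_identifiers) for record in records
    )
    return {group: n for group, n in counts.items() if n < k}


# Hypothetical dataset: six older patients and one younger outlier
records = (
    [{"age_band": "60-69", "condition": "dementia"}] * 6
    + [{"age_band": "30-39", "condition": "dementia"}]
)

risky = groups_below_k(records, ["age_band", "condition"], K_EXTERNAL)
print(risky)  # prints {('30-39', 'dementia'): 1}
```

Groups flagged in this way could then be generalised further, suppressed, or handled under additional safeguards, depending on how important the records are to the research.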

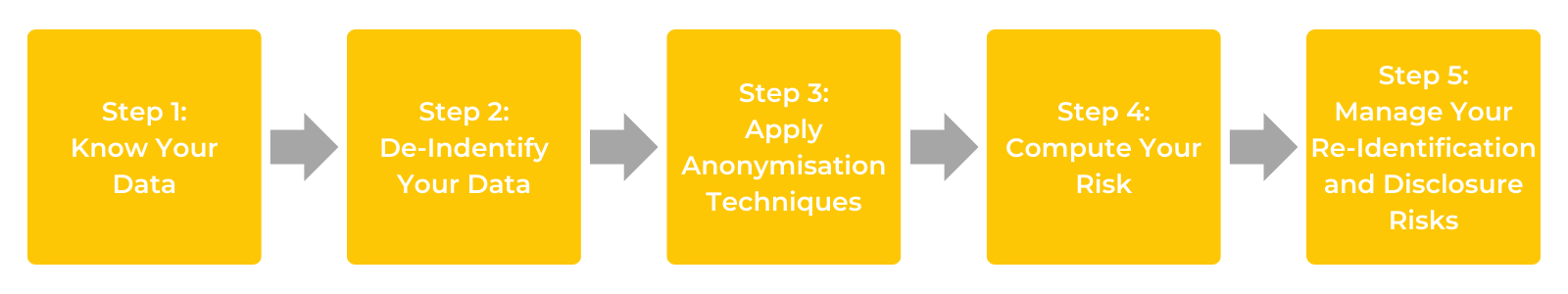

Singapore Supervisory Authority 5 step process

In March 2022, the PDPC published a five-step process which organisations can use to perform anonymisation.

Figure 1: PDPC 5-step anonymisation process

This mature approach incorporates all the previous methodologies:

Step 1: Know your data – be aware of the data attributes and the aspects of identifiability

Step 2: De-identify your data – remove identifiers and apply pseudonyms

Step 3: Apply anonymisation techniques – e.g. data masking, k-anonymity etc

Step 4: Compute your risks – assess using tests such as the motivated intruder test

Step 5: Manage your re-identification and disclosure risks

The key innovation of this 5-step process is how the trajectory is not limited to the actual act of anonymisation. Both before and after implementing a process, organisations need to conduct dataflow mapping, undertake risk assessments and implement appropriate technical and organisational measures.

These controls are common to most developed compliance frameworks, and the PDPC’s five step process offers a useful tool to complement and enhance any existing practices. Organisations should apply robust processes to assist clinical trial operations while maintaining resilient data protection.

Data that cannot be anonymised

As mentioned earlier in relation to outliers and anomalies, some study data cannot be anonymised. For these projects, other measures should be considered, and these include:

- Implementing access controls to locations where personal data is stored

- Regularly reviewing and updating information security practices

- Providing regular training to employees and ensuring staff are suitably resourced

- Adopting encryption to ensure personal data is protected in storage and transit

Working to reduce the data protection risks

To conclude, Contract Research Organisations (CROs), sponsors, and where applicable clinical research sites, all have an important role to play in data protection that extends beyond the application of appropriate pseudonymisation and anonymisation techniques. They need to remain informed of any legislative changes to ensure compliance, in addition to keeping abreast of any new risk reduction techniques that may arise in the future.

Ultimately, the only way for life science organisations to reduce risks is to implement robust technical and organisational measures as well as having clear and concise agreements in place.

The contracts between sponsors, CROs and partners must establish contractual responsibilities, at a minimum, for all of these areas:

- How personal data will be protected, including anonymisation obligations

- Data transfers

- Procedures for responding to data subjects exercising their rights

- Processes for deleting data at the end of the study.

By focussing on collaboration and risk reduction, life sciences organisations can effectively navigate data protection challenges while promoting innovation and compliance.

*It should be noted that in Case T-557/20, SRB v EDPS, the General Court of the European Union ruled that the pseudonymised data SRB disclosed to a third party could be considered anonymous. The onward ramifications of this judgement for the wider data protection industry are still percolating; however, within the context of clinical trials, there are persuasive arguments for delaying any drastic operational model redesigns at this time. The DPO Centre will be monitoring the situation closely. Clients will be provided with expert advice for any necessary adjustments, as and when needed and appropriate.

Looking for the right support for life sciences data protection?

The DPO Centre offers flexible and tailored data protection support with professional advice and expertise specific to life sciences organisations. We provide outsourced data protection officers (DPOs) who work as an integral member of your team, as well as Data Protection Representatives (DPR) in both the EU and UK.

We will help you to quantify your anonymisation risks and support you with your wider data protection obligations, both before and after your trial begins. Our DPOs and EU/UK Representatives assist with privacy maturity reviews, Data Protection Impact Assessments (DPIAs), and dataflow mapping exercises. We review protocols, policies, Privacy Notices, Informed Consent Forms, and other clinical trial documentation, as required. We will also advise in greater detail as to which datasets may be subject to applicable data retention periods, and therefore require specific anonymisation and archival techniques to be applied.

With thanks to James Boyle from Mishcon de Reya for content contributions. Discover more about risk reduction in the next blog, Anonymisation Part 2: Risk Reduction for CROs, Sponsors & Partners Conducting Clinical Trials.