Artificial intelligence (AI) is rapidly reshaping the way criminals conduct cyberattacks. In this blog, we examine how AI is making social engineering harder to detect, look at the impact on high-value industries, and share practical steps organisations can take to reduce their risk.

AI tools now allow attackers to launch scams at scale, scrape personal details for precision targeting, and impersonate executives with convincing deepfakes. The result is a surge in attacks that are harder to spot and carry greater financial, regulatory, and reputational consequences.

We explore each of these areas in more detail below:

What is social engineering?

Social engineering is a form of criminal manipulation in which attackers exploit human psychology rather than technical vulnerabilities. Instead of breaking through software flaws, they gather information and build trust to create a false sense of familiarity or legitimacy. This deception allows them to trick victims into revealing confidential information or granting access to protected systems.

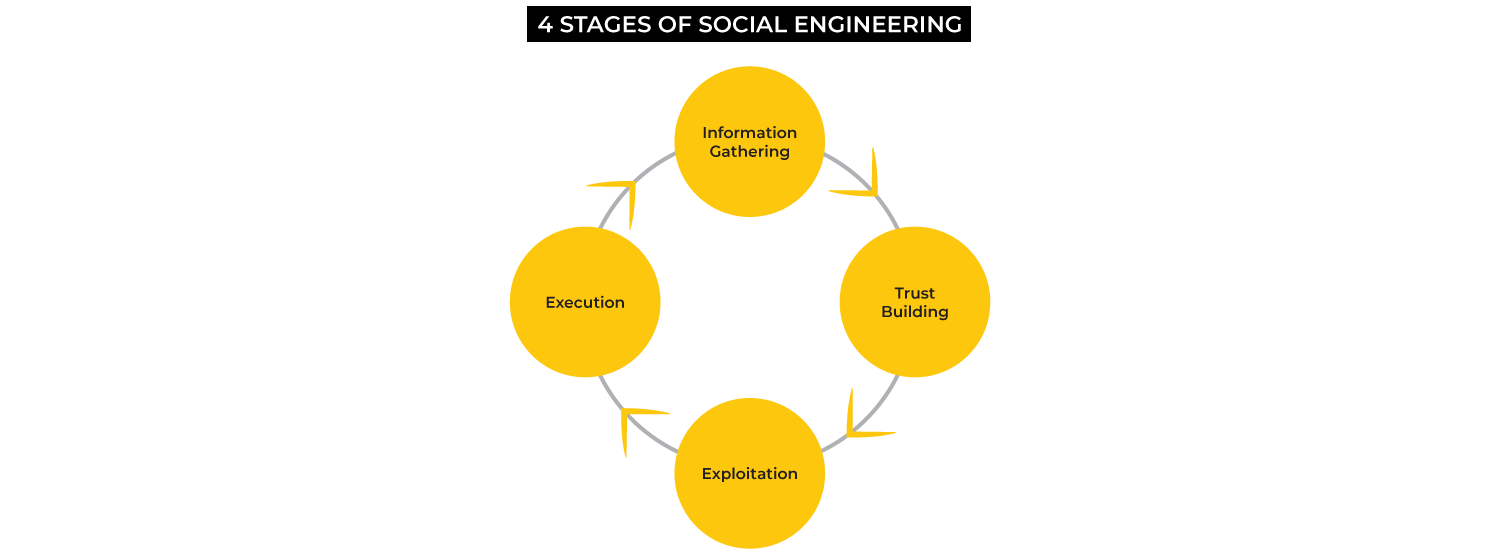

Most attacks follow four key stages, each designed to exploit human behaviour and our natural tendency to trust and help others.

At every stage, social engineers use psychological levers like authority, urgency, and curiosity. The resulting attack feels less like a hack and more like a conversation, which is why it is so dangerous. For senior leaders, the concern is that these attacks often succeed despite significant investment in cybersecurity tools.

The 4 stages of social engineering attacks

First, attackers collect details about employees, suppliers, and executives to craft credible approaches. This could be as simple as scanning LinkedIn for job titles, browsing company websites for upcoming events, or noting email address formats. They may also trawl social media for personal details such as birthdays, hobbies, or family members, and some dig deeper still, searching the dark web or discarded paperwork for sensitive data.

Example: An attacker sees on LinkedIn that a company has just hired several new staff. Knowing from the website that IT support uses a standard email format, they prepare a convincing message, posing as the helpdesk.

Tip: Regularly remind new employees during onboarding that IT support will never request passwords or personal data via email. Reinforce this with the wider workforce so attackers can’t exploit uncertainty.

Next, armed with this information, attackers pose as colleagues, vendors, or authority figures. This can take many forms, including pretexting (inventing a cover story, such as pretending to be a supplier or regulator) and baiting (offering something of apparent value, such as a free resource or download, to win confidence). Attackers may connect on LinkedIn, follow up on an event invitation, or impersonate technical support to gain credibility.

Example: The attacker phones an employee, posing as an IT support technician. They reference the recent new hires and explain they’re rolling out a password reset for all accounts to fix access issues.

Tip: Encourage employees to verify unexpected communications, especially those requesting action, through a second channel. This could mean calling a known number, checking credentials via an internal directory, or using pre-agreed passphrases for sensitive requests.
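The key rule behind second-channel verification is that the contact details used to check a request must come from a source the organisation already trusts, never from the message itself. The Python sketch below illustrates that rule under stated assumptions: the `DIRECTORY` of known numbers, the `Request` fields, and the phone numbers are all hypothetical, chosen only for the example.

```python
# A minimal sketch of out-of-band (second-channel) verification.
# DIRECTORY, Request and the phone numbers are hypothetical examples.
from dataclasses import dataclass

# Known-good contact details, maintained internally (assumed for illustration).
DIRECTORY = {"it-helpdesk": "+44 20 7946 0000"}


@dataclass
class Request:
    claimed_sender: str      # who the message claims to be from
    contact_in_message: str  # number supplied in the message (untrusted)
    asks_for_action: bool    # e.g. password reset, payment or data transfer


def verification_step(req: Request) -> str:
    """Decide how to verify a request before acting on it."""
    if not req.asks_for_action:
        return "no action requested"
    known_number = DIRECTORY.get(req.claimed_sender)
    if known_number is None:
        return "escalate: sender not in directory"
    # Deliberately ignore req.contact_in_message: the attacker controls it.
    return f"call {known_number} to verify"


if __name__ == "__main__":
    suspicious = Request("it-helpdesk", "+44 7700 900123", asks_for_action=True)
    print(verification_step(suspicious))  # call +44 20 7946 0000 to verify
```

Whatever number the ‘technician’ provides, the employee ends up calling the helpdesk number the organisation already holds.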

Once trust is established, the attacker makes a small request that lowers the target’s defences. This often arrives as a phishing or spear phishing email designed to trick the recipient into clicking a malicious link, downloading an attachment, or entering login details. It may also come through vishing (voice) or smishing (text message) scams that add urgency and pressure. A small request like this can quickly escalate into a much larger compromise.

Example: The ‘technician’ emails the employee a link to a spoofed login page and asks them to quickly enter their username and password to test the system.

Tip: Train employees to inspect emails with a critical eye. Check the sender’s address for subtle misspellings, hover over links to preview destinations, and question any message that pushes urgency or secrecy.
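Spotting a subtle misspelling in a sender’s domain is something software can help with too. As a rough illustration, the Python sketch below flags domains that sit within a small edit distance of a trusted one (for example, `examp1e.com` versus `example.com`). The trusted domain list and the threshold of two edits are assumptions for the example, not recommended settings.

```python
# A minimal sketch of flagging lookalike sender domains.
# TRUSTED_DOMAINS and the default threshold are illustrative assumptions.

TRUSTED_DOMAINS = {"example.com", "examplebank.co.uk"}  # hypothetical


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: the minimum number of single-character
    insertions, deletions or substitutions needed to turn a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = curr
    return prev[-1]


def classify_sender(address: str, max_distance: int = 2) -> str:
    """Return 'trusted', 'lookalike' or 'unknown' for an email address."""
    domain = address.rsplit("@", 1)[-1].strip().lower()
    if domain in TRUSTED_DOMAINS:
        return "trusted"
    for trusted in TRUSTED_DOMAINS:
        if edit_distance(domain, trusted) <= max_distance:
            return "lookalike"  # close to, but not exactly, a trusted domain
    return "unknown"


if __name__ == "__main__":
    print(classify_sender("helpdesk@examp1e.com"))  # lookalike
```

This only catches near-miss spellings. Real filters also look for tricks the sketch ignores, such as look-alike Unicode characters or a trusted name buried inside a longer domain (`example.com.attacker.net`), which this code would simply report as unknown.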

Finally, the attacker cashes in. This can mean stealing data, installing malware, or, most often, committing financial fraud.

Example: With the stolen credentials, the attacker gains access to the company’s internal systems, downloads confidential files, and uses the compromised account to send further phishing emails from inside the organisation.

Tip: Segment access rights so that even if one account is compromised, attackers can’t move freely across systems. Apply the principle of least privilege and regularly audit who has access to what.
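In practice, auditing who has access to what means comparing the permissions each person holds with those their role actually needs. The Python sketch below shows that comparison; the role names, permissions and user records are hypothetical, and a real review would pull this data from your identity or directory system.

```python
# A minimal sketch of a least-privilege access review.
# Roles, permissions and user records are hypothetical examples.
from typing import Dict, Set

# What each role genuinely needs (assumed for illustration).
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "helpdesk": {"reset_password", "view_tickets"},
    "finance": {"view_invoices", "approve_payments"},
}


def excess_permissions(
    user_roles: Dict[str, str],
    user_grants: Dict[str, Set[str]],
) -> Dict[str, Set[str]]:
    """Return, per user, any granted permissions their role does not require."""
    report: Dict[str, Set[str]] = {}
    for user, granted in user_grants.items():
        # Users with no known role get an empty "needed" set,
        # so every permission they hold is flagged for review.
        needed = ROLE_PERMISSIONS.get(user_roles.get(user, ""), set())
        extra = granted - needed
        if extra:
            report[user] = extra
    return report


if __name__ == "__main__":
    roles = {"alice": "helpdesk", "bob": "finance"}
    grants = {
        "alice": {"reset_password", "view_tickets", "approve_payments"},
        "bob": {"view_invoices"},
    }
    print(excess_permissions(roles, grants))  # {'alice': {'approve_payments'}}
```

Flagging users with no recognised role errs on the side of review rather than silently passing accounts nobody can explain.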

How is AI making social engineering harder to detect?

AI hasn’t invented new forms of social engineering: phishing, impersonation, and fraudulent requests remain the core tactics. What has changed is their scale, speed, and believability. According to Tech News, AI-enabled cyberattacks rose by 47% globally in 2025. A single convincing message, such as a fake invoice, a bogus regulator request, or an executive impersonation, can now bypass traditional defences and cause financial, regulatory, and reputational damage.

For senior leaders, this shift means social engineering is no longer just an IT problem, but a strategic business risk that requires board-level attention. The following examples show how AI is reshaping familiar scams into far more dangerous threats:

Polished phishing: Grammatically flawless, context-aware, AI-generated emails and messages that mimic a company’s tone. They remove traditional red flags like spelling mistakes or generic greetings.

Deepfake impersonation: AI-powered voice and video cloning that convincingly mimics executives, colleagues, or regulators. These deepfakes are often used in high-pressure scenarios like urgent payments or data requests.

Automated reconnaissance: AI-driven scraping of social media, websites, and public records to build detailed victim profiles, enabling highly tailored and convincing messages.

Adaptive scams: AI chatbots that hold real-time conversations and adjust responses to any doubt or hesitation, which makes scams feel natural rather than scripted.

Scale and speed: AI can enable thousands of personalised scams at once, each slightly different, overwhelming users and outpacing traditional security tools.

How to defend your organisation against social engineering attacks

Defending against AI-fuelled social engineering requires more than a single solution. Organisations need a layered approach that combines people, technology, and clear processes.

1. Strengthen staff awareness

Deliver regular training on social engineering tactics and show how attackers exploit urgency, authority, and fear. Promote a ‘pause and verify’ culture where employees feel confident double-checking unusual requests, even those appearing to come from senior colleagues.

2. Run phishing simulations

Test staff readiness with realistic drills to help them recognise and respond to suspicious messages in a safe environment.

3. Deploy intelligent email filtering

Use advanced filters to block spoofed domains, malicious attachments, and suspicious links before they reach inboxes. Pair with anomaly detection to flag unusual activity at scale.

4. Require multi-factor authentication (MFA)

Mandate phishing-resistant MFA on all accounts so that stolen credentials alone are not enough for an attacker to log in.

5. Update policies regularly

Keep cybersecurity and data handling policies aligned with evolving threats. Maintain an incident response plan that clearly sets out how to contain, investigate, and communicate during an attack.

6. Reduce the attack surface

Limit unnecessary staff and corporate details on public sites and encourage employees to tighten privacy settings on professional networks and social media.

AI is transforming social engineering into a faster, more scalable, and more convincing threat. A single phishing email or deepfake call can result in severe financial losses, regulatory penalties, and lasting reputational harm. High-value sectors such as Finance, Life Sciences, and Technology are already experiencing these consequences.

Traditional security tools on their own are no longer enough. A layered defence is essential, combining staff training, clear policies, and advanced technical safeguards, whilst also limiting the organisation’s digital footprint. By embedding awareness and resilience across people, processes, and technology, organisations can reduce their exposure and maintain the trust of customers, regulators, and stakeholders.

If your company would benefit from expert data protection advice and guidance, please contact us.

